The Math Behind Data Science Interviews

- Dr Dilek Celik

- Jul 17

- 5 min read

Over the years, in my journey through academia, mentoring, and collaborative projects with hiring managers, I repeatedly heard one consistent observation: many aspiring data scientists who are well-versed in using modern tools often lack a strong grip on the underlying mathematical foundations. Despite their comfort with libraries like pandas, TensorFlow, or scikit-learn, they struggle to explain the logic behind model behavior. It turns out that a deeper understanding of mathematics—particularly statistics, linear algebra, calculus, and probability—is what separates the good from the great.

This isn’t simply about knowing theory for theory's sake. The mathematical mindset is what helps translate business problems into data problems, assess uncertainty, validate results, and ultimately build better models. With that realization, and after learning from professionals who have led hiring processes at top-tier institutions and companies, I decided to put together a roadmap of key math concepts that kept coming up in their conversations. These principles don’t just help during interviews—they form the bedrock of real-world problem solving.

The aim of this article is to give a structured overview of these core mathematical ideas, along with illustrative Python code to make the material hands-on. Whether you're preparing for an interview, upskilling, or revisiting foundational concepts, this post is designed to help you become more confident in your mathematical intuition.

1. 📊 Statistical Thinking: Building Intuition from Data

Statistics is where many professionals notice room for improvement in new hires. It helps you draw insights from data, identify relationships between variables, and validate hypotheses. The three main areas covered here are:

Descriptive Statistics

Inferential Statistics

Sampling

For example, in interviews, you might analyze patterns in data (descriptive statistics) or estimate population properties using samples (inferential statistics).

1.1 Descriptive Statistics: Start with the Basics

Every interview begins with understanding the data. The ability to compute and interpret measures such as mean, median, and standard deviation is fundamental. These tell you about the central tendency and dispersion in the dataset.

# Import required libraries
import numpy as np
import pandas as pd

# Create a sample dataset
data = {'Values': [10, 12, 15, 20, 22, 25, 30, 35, 40, 50]}

# Convert to a DataFrame
df = pd.DataFrame(data)

# Compute key metrics
mean = df['Values'].mean()
median = df['Values'].median()
std_dev = df['Values'].std()  # pandas uses the sample standard deviation (ddof=1)

print(f"Mean: {mean}")
print(f"Median: {median}")
print(f"Standard Deviation: {std_dev}")

The code summarizes the dataset's center (mean and median) and spread (standard deviation). This is an example of Univariate Analysis, which examines the properties of a single variable, such as its center, spread, and shape.

In interview scenarios, candidates are often asked to explain skewness, kurtosis, or how to handle outliers. These stem directly from descriptive statistics and impact modeling choices.
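As a sketch of those ideas, the snippet below computes skewness and excess kurtosis with scipy.stats and flags outliers using Tukey's 1.5 × IQR rule on the same ten values. The IQR rule is one common convention rather than the only one, and the 1.5 multiplier is a rule of thumb.

```python
import numpy as np
from scipy import stats

# Same ten values as the descriptive-statistics example above
values = np.array([10, 12, 15, 20, 22, 25, 30, 35, 40, 50])

# Skewness > 0 means a longer right tail (mean pulled above the median)
skewness = stats.skew(values)
# scipy reports *excess* kurtosis by default (a normal distribution gives 0)
excess_kurtosis = stats.kurtosis(values)

# Tukey's rule: flag points more than 1.5 * IQR outside the quartiles
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = values[(values < lower) | (values > upper)]

print(f"Skewness: {skewness:.3f}")
print(f"Excess kurtosis: {excess_kurtosis:.3f}")
print(f"IQR fences: ({lower}, {upper}), outliers: {outliers}")
```

For these values the mean (25.9) sits above the median (23.5), consistent with the positive skew, and no point falls outside the IQR fences.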

1.2 Inferential Statistics: From Sample to Population

Many interviews include questions about confidence intervals and hypothesis testing, which let you make population-level inferences from a smaller sample. Below, we compute a 95% confidence interval for a sample mean:

import numpy as np
import scipy.stats as stats

# Generate synthetic data (true mean 20, standard deviation 5)
np.random.seed(0)
sample_data = np.random.normal(loc=20, scale=5, size=100)

# Compute confidence interval
confidence = 0.95
sample_mean = np.mean(sample_data)
std_error = stats.sem(sample_data)  # standard error of the mean

# t-distribution with n - 1 degrees of freedom, centered on the sample mean
conf_interval = stats.t.interval(confidence, len(sample_data)-1, loc=sample_mean, scale=std_error)

print(f"Mean: {sample_mean}")
print(f"95% Confidence Interval: {conf_interval}")

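The resulting interval runs from roughly 19.29 to 21.30 and contains the true mean of 20 used to generate the data. Strictly, "95% confidence" describes the procedure: if we repeated the sampling many times, about 95% of the intervals built this way would contain the true population mean.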

Understanding how to interpret this interval is key. For example, if a 95% interval excludes a hypothesized value (e.g., a benchmark mean), the difference is statistically significant at the 5% level in a two-sided test. For exploring relationships among several variables at once, Multivariate Analysis extends these single-variable ideas.
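The same sample can be used for a formal hypothesis test. This minimal sketch assumes a hypothetical benchmark of 18 for the population mean and runs a two-sided one-sample t-test with scipy.stats.ttest_1samp. Because the 95% interval above lies entirely above 18, the test should reject the null hypothesis at the 5% level; for this test the interval and the p-value tell the same story.

```python
import numpy as np
import scipy.stats as stats

# Recreate the synthetic sample from the confidence-interval example
np.random.seed(0)
sample_data = np.random.normal(loc=20, scale=5, size=100)

# H0: population mean == 18 (a hypothetical benchmark chosen for illustration)
t_stat, p_value = stats.ttest_1samp(sample_data, popmean=18)

alpha = 0.05
print(f"t = {t_stat:.3f}, p = {p_value:.4g}")
if p_value < alpha:
    print("Reject H0 at the 5% level")
else:
    print("Fail to reject H0 at the 5% level")
```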

1.3 Sampling Techniques

Sampling is critical—professionals stress that many candidates overlook its nuances. A "random sample" isn’t always optimal. Key methods include:

Simple Random Sampling (SRS): Every member has an equal chance of selection. However, a small random sample can over- or under-represent subgroups (e.g., age groups) purely by chance, which skews results.

Stratified Sampling: Divides the population into subgroups (strata) for proportional representation.

Systematic Sampling: Selects every n-th member after a random start.

Here’s an SRS example:

import numpy as np

# Define a population
population = np.arange(1, 1001)

# Take a random sample
sample = np.random.choice(population, size=10, replace=False)

print("Random Sample:")
print(sample)

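The code draws 10 distinct values (replace=False), with every member of the population equally likely to be chosen. Because no random seed is set, the sample changes on every run.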
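Stratified and systematic sampling can be sketched in a few lines with pandas. The population below is hypothetical (1,000 people with made-up age-group proportions), and the 10% sampling fraction and step size of 100 are arbitrary illustrative choices. DataFrame.groupby(...).sample requires pandas 1.1 or later.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)

# Hypothetical population: 1,000 people labeled with an age group
population = pd.DataFrame({
    "id": np.arange(1, 1001),
    "age_group": rng.choice(["18-34", "35-54", "55+"], size=1000, p=[0.5, 0.3, 0.2]),
})

# Stratified sampling: draw 10% from each age group so proportions are preserved
stratified = population.groupby("age_group").sample(frac=0.1, random_state=42)

# Systematic sampling: random start in [0, k), then every k-th member
k = 100
start = int(rng.integers(0, k))
systematic = population.iloc[start::k]

print(stratified["age_group"].value_counts(normalize=True).round(2))
print(systematic["id"].tolist())
```

Stratifying guarantees each group appears in roughly its population share, which a simple random sample of the same size only achieves on average.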

2. 🔧 Calculus: Foundations of Model Training

Calculus underpins optimization in machine learning, helping you understand how input changes affect outputs.

2.1 Derivatives

Many machine learning algorithms revolve around optimization, and derivatives describe how a function's output changes as its input changes. That is exactly the information a model needs to decide how to adjust its parameters during training.

Example:

import sympy as sp

# Define a function
x = sp.Symbol('x')
f = x**2 + 2*x + 1

# Compute its derivative
derivative = sp.diff(f, x)

print("Derivative:")
print(derivative)

The derivative (2x + 2) indicates the function’s slope at any point.
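Setting the derivative to zero finds the stationary points, and the sign of the second derivative tells you whether such a point is a minimum. A short sympy sketch for the same function:

```python
import sympy as sp

x = sp.Symbol('x')
f = x**2 + 2*x + 1

# First-order condition: the slope is zero at a stationary point
critical_points = sp.solve(sp.diff(f, x), x)

# Second-order check: a positive second derivative means a minimum
second_derivative = sp.diff(f, x, 2)

print("Critical points:", critical_points)            # [-1]
print("Second derivative:", second_derivative)         # 2
print("f at that point:", f.subs(x, critical_points[0]))  # 0
```

Since f(x) = (x + 1)², the minimum value 0 is reached at x = -1, the same point gradient descent approaches in the next example.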

When asked to explain gradient descent, candidates are often expected to derive the gradient of a loss function or explain the derivative's role in finding minima.

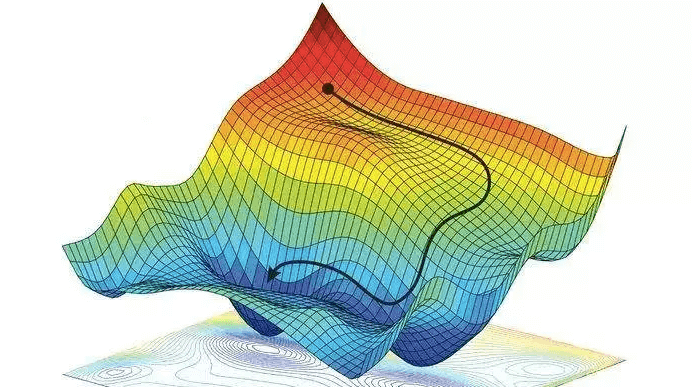

2.2 Gradient Descent and Optimization

This algorithm minimizes a loss function by iteratively adjusting parameters, each time taking a small step opposite the derivative: x_new = x - learning_rate * f'(x). Below, we find the minimum of f(x) = x² + 2x + 1:

import numpy as np
import matplotlib.pyplot as plt

# Define the function and its derivative
def f(x):
    return x**2 + 2*x + 1

def df(x):
    return 2*x + 2

# Gradient descent setup
x_start = 5
learning_rate = 0.05
iterations = 50

# Perform optimization: step against the gradient each iteration
x = x_start
x_values = [x]
for _ in range(iterations):
    grad = df(x)
    x -= learning_rate * grad
    x_values.append(x)

# Plot the function and the points visited by gradient descent
x_range = np.linspace(-6, 4, 100)
plt.plot(x_range, f(x_range), label='f(x)')
plt.scatter(x_values, [f(v) for v in x_values], color='red', label='GD steps')
plt.title('Gradient Descent Path')
plt.xlabel('x')
plt.ylabel('f(x)')
plt.legend()
plt.show()

After 50 iterations x is about -0.97, so the algorithm is converging toward the true minimum at x = -1.
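The learning rate decides whether this converges at all. For this quadratic the update is x_new = x - η(2x + 2), so the distance to the minimum evolves as (x_new + 1) = (1 - 2η)(x + 1): it shrinks when 0 < η < 1 and grows when η > 1. The sketch below reuses the same derivative and starting point; run_gd is a helper defined here for illustration, and the learning rates tried are arbitrary.

```python
def df(x):
    # Derivative of f(x) = x**2 + 2*x + 1
    return 2 * x + 2

def run_gd(learning_rate, x0=5.0, iterations=50):
    """Return the final x after plain gradient descent on f."""
    x = x0
    for _ in range(iterations):
        x -= learning_rate * df(x)
    return x

for lr in [0.05, 0.5, 0.9, 1.1]:
    print(f"learning_rate={lr}: final x = {run_gd(lr):.4f}")
```

With η = 0.5 the update lands on x = -1 in a single step, η = 0.9 oscillates around the minimum while closing in, and η = 1.1 overshoots by more each iteration and diverges.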

A common interview favorite is explaining gradient descent—how iterative parameter updates lead to convergence. This type of question tests both your calculus understanding and how you apply it programmatically.
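To connect this to model training, here is gradient descent fitting a one-feature linear regression by minimizing mean squared error. Differentiating MSE = mean((w*x + b - y)²) gives dMSE/dw = 2*mean(error*x) and dMSE/db = 2*mean(error). The synthetic data (true slope 3, intercept 2), learning rate, and iteration count are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data from y = 3x + 2 plus small noise (illustrative values)
X = rng.uniform(-1, 1, size=200)
y = 3 * X + 2 + rng.normal(0, 0.1, size=200)

w, b = 0.0, 0.0
learning_rate = 0.1

for _ in range(2000):
    error = (w * X + b) - y
    # Gradients of MSE with respect to w and b
    grad_w = 2 * np.mean(error * X)
    grad_b = 2 * np.mean(error)
    # Step both parameters against their gradients
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"Learned w = {w:.3f}, b = {b:.3f}")
```

The learned parameters should land close to the true slope and intercept; neural networks apply the same update rule, just with far more parameters and gradients computed by backpropagation.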

3. 📐 Linear Algebra: Geometry of Data and Models

3.1 Vectors and Matrices

Vectors and matrices form the backbone of ML models like neural networks: a dataset is usually stored as a matrix with one row per observation, and model parameters are vectors or matrices.

import numpy as np

# Define a vector
vector = np.array([1, 2, 3])

# Define a matrix
matrix = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

print("Vector:")
print(vector)
print("Matrix:")
print(matrix)
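To see why these objects matter, consider a single dense neural-network layer: it is a matrix-vector product plus a bias, followed by a nonlinearity. The weights and bias below are made up for illustration, and ReLU is one common choice of activation.

```python
import numpy as np

# Input vector with 3 features (same shape as `vector` above)
x = np.array([1, 2, 3])

# Hypothetical weights for a layer mapping 3 inputs to 2 outputs
W = np.array([[0.2, -0.1, 0.4],
              [-0.5, 0.3, -0.2]])
b = np.array([0.1, -0.1])

# Dense layer: matrix-vector product plus bias
z = W @ x + b
# ReLU activation zeroes out negative entries
activation = np.maximum(z, 0)

print("Pre-activation:", z)
print("After ReLU:", activation)
```

Stacking a batch of inputs as rows of a matrix turns this into a single matrix-matrix product, which is why fast matrix multiplication dominates deep-learning workloads.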

3.2 Matrix Operations

Matrix multiplication, transposition, and inversion underpin many ML models:

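import numpy as np

# Matrix multiplication
A = np.array([[1, 2], [3, 4]])
B = np.array([[5, 6], [7, 8]])
C = np.dot(A, B)  # equivalent to A @ B

# Transposition
A_transposed = A.T

# Inversion (A must be square and non-singular)
A_inv = np.linalg.inv(A)

print("Multiplication:")
print(C)
print("Transpose:")
print(A_transposed)
print("Inverse:")
print(A_inv)
print("A multiplied by its inverse:")
print(A @ A_inv)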

Multiplying a matrix by its inverse yields the identity matrix (with minor rounding errors).

Understanding these operations prepares you for algorithms like Linear Regression, PCA, and Neural Networks.
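Linear regression is a good example: the ordinary least squares coefficients satisfy the normal equation (XᵀX)β = Xᵀy, i.e. β = (XᵀX)⁻¹Xᵀy. The sketch below uses synthetic data with known coefficients (assumed for illustration) and calls np.linalg.solve instead of explicitly inverting XᵀX, which is the numerically preferred route.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic data: 100 observations, 2 features, known coefficients (illustrative)
X = rng.normal(size=(100, 2))
true_beta = np.array([1.5, -2.0])
y = 4.0 + X @ true_beta + rng.normal(0, 0.1, size=100)

# Prepend a column of ones so the intercept is estimated as a coefficient
X_design = np.column_stack([np.ones(len(X)), X])

# Solve the normal equation (X^T X) beta = X^T y without forming the inverse
beta_hat = np.linalg.solve(X_design.T @ X_design, X_design.T @ y)

print("Estimated [intercept, b1, b2]:", beta_hat.round(3))
```

np.linalg.lstsq(X_design, y, rcond=None) should return the same coefficients and is more robust when features are nearly collinear.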

3.3 Eigenvalues and Eigenvectors

These are vital for dimensionality reduction (e.g., PCA).

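import numpy as np

# A symmetric 2x2 matrix
A = np.array([[3, 1], [1, 3]])

# Eigenvalues, and eigenvectors stored as the columns of eigenvecs
eigenvals, eigenvecs = np.linalg.eig(A)

print("Eigenvalues:")
print(eigenvals)
print("Eigenvectors:")
print(eigenvecs)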

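Explaining what these values mean geometrically can impress interviewers. Each eigenvector is a direction that A only stretches, and its eigenvalue is the stretch factor: here 4 along (1, 1) and 2 along (1, -1). When the matrix is a covariance matrix, the eigenvectors are the directions of maximum variance and the eigenvalues are the variances along them, which is exactly what PCA relies on.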
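A bare-bones PCA makes the connection concrete: center the data, compute its covariance matrix, and sort its eigenvectors by eigenvalue. The correlated 2-D data below is synthetic, and np.linalg.eigh is used because covariance matrices are symmetric. Libraries such as scikit-learn's PCA compute the same components via SVD; this is only a sketch of the idea.

```python
import numpy as np

rng = np.random.default_rng(2)

# Correlated 2-D data: most of the variance lies along the direction (1, 1)
latent = rng.normal(size=500)
data = np.column_stack([latent + 0.1 * rng.normal(size=500),
                        latent + 0.1 * rng.normal(size=500)])

# Center the data and compute the covariance matrix (columns are variables)
centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)

# eigh handles symmetric matrices and returns eigenvalues in ascending order
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

explained = eigvals / eigvals.sum()
projected = centered @ eigvecs[:, :1]  # keep only the first principal component

print("Explained variance ratio:", explained.round(3))
print("First principal direction:", eigvecs[:, 0].round(3))
```

The first direction comes out close to ±(0.707, 0.707), and keeping only that component reduces the data from two dimensions to one while retaining almost all of its variance.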

4. 🎲 Probability: Quantifying Uncertainty

Probability helps quantify uncertainty—critical for forecasting and modeling.

4.1 Distributions and Expectations

Most interviews touch on probability distributions, particularly the normal distribution and expected values. The "bell curve" is characterized by:

Symmetry around the mean.

Mean = Median = Mode.

The 68-95-99.7 rule: about 68%, 95%, and 99.7% of values fall within ±1, ±2, and ±3 standard deviations of the mean, respectively.

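import numpy as np
import matplotlib.pyplot as plt

# Draw 1,000 samples from a standard normal distribution (mean 0, sd 1)
data = np.random.normal(0, 1, 1000)

# density=True scales the bars so the histogram integrates to 1
plt.hist(data, bins=30, density=True)
plt.title('Normal Distribution')
plt.show()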
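The 68-95-99.7 rule can be checked directly. This sketch compares the exact normal probabilities from scipy.stats.norm.cdf with the share of 100,000 simulated draws (an arbitrary sample size) that fall within ±1, ±2, and ±3 standard deviations.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
samples = rng.normal(loc=0, scale=1, size=100_000)

for k in (1, 2, 3):
    # Exact probability of falling within ±k standard deviations
    exact = stats.norm.cdf(k) - stats.norm.cdf(-k)
    # Empirical share of simulated draws inside the same band
    empirical = np.mean(np.abs(samples) <= k)
    print(f"±{k} sd: exact {exact:.4f}, simulated {empirical:.4f}")
```

The exact values are about 0.6827, 0.9545, and 0.9973, which is where the rule's rounded percentages come from.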

Being able to explain why a normal distribution appears so often (Central Limit Theorem) is a good sign of depth.
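A quick simulation illustrates the Central Limit Theorem: means of many samples drawn from a strongly skewed distribution are themselves approximately normal, centered on the population mean with standard deviation σ/√n. The exponential source distribution (mean 1, standard deviation 1), sample size of 50, and 10,000 repetitions are illustrative assumptions.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# 10,000 samples of size 50 from an exponential distribution (mean 1, sd 1)
n, trials = 50, 10_000
sample_means = rng.exponential(scale=1.0, size=(trials, n)).mean(axis=1)

# CLT prediction: sample means are approximately Normal(1, 1/sqrt(n))
print(f"Mean of sample means: {sample_means.mean():.3f} (theory 1.000)")
print(f"Std of sample means: {sample_means.std():.3f} (theory {1/np.sqrt(n):.3f})")

# The raw draws are strongly skewed (theory 2); their means are much less so
print(f"Skewness of raw draws: {stats.skew(rng.exponential(size=100_000)):.2f}")
print(f"Skewness of sample means: {stats.skew(sample_means):.2f}")
```

Plotting a histogram of sample_means would show a near-bell shape even though each individual draw comes from a lopsided distribution.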

4.2 Bayes’ Theorem and Conditional Probability

This updates probabilities based on new evidence: P(A|B) = [P(B|A) * P(A)] / P(B)

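For example, you might adjust the probability that someone owns a Tesla after learning their age. The code below works through a classic medical-testing case instead: a disease with 1% prevalence, a test that detects 99% of true cases, and a 5% false-positive rate among healthy people.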

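# Bayes' theorem illustration: P(A|B) = [P(B|A) * P(A)] / P(B)
# A = has the disease, B = tests positive
p_disease = 0.01                 # prior: prevalence of the disease
p_positive_given_disease = 0.99  # sensitivity of the test
p_positive_given_healthy = 0.05  # false-positive rate
p_healthy = 1 - p_disease

# Law of total probability: overall chance of a positive test
p_positive = (p_positive_given_disease * p_disease) + (p_positive_given_healthy * p_healthy)

# Posterior: probability of disease given a positive test
posterior = (p_positive_given_disease * p_disease) / p_positive

print(f"Probability of disease given a positive test: {posterior:.4f}")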

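With these numbers, P(positive) = 0.99 * 0.01 + 0.05 * 0.99 = 0.0594, so the posterior is 0.0099 / 0.0594 = 1/6 ≈ 0.1667. Even with an accurate test, a positive result raises the probability of disease from 1% to only about 17%, because false positives among the many healthy people outnumber the true positives. This kind of reasoning is useful in classification problems, spam filtering, and fraud detection.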
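Bayes' theorem can also be applied repeatedly, with each posterior becoming the prior for the next piece of evidence. The sketch below assumes, hypothetically, that a second test result is independent of the first given the patient's true status; bayes_update is a helper defined here for illustration, reusing the sensitivity and false-positive rate from the example above.

```python
def bayes_update(prior, p_pos_given_disease=0.99, p_pos_given_healthy=0.05):
    """Posterior P(disease | positive test) for a given prior."""
    # Law of total probability for a positive result
    p_positive = p_pos_given_disease * prior + p_pos_given_healthy * (1 - prior)
    return p_pos_given_disease * prior / p_positive

# Assumption: test results are independent given the true disease status
after_first = bayes_update(0.01)          # prior = prevalence
after_second = bayes_update(after_first)  # previous posterior becomes the new prior

print(f"After one positive test:  {after_first:.4f}")
print(f"After two positive tests: {after_second:.4f}")
```

Under that assumption, a second positive result lifts the probability from about 0.167 to about 0.798. Naive Bayes spam filters chain updates in a similar way, treating each word as a separate, conditionally independent piece of evidence.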

🧠 Conclusion: From Theory to Practice

As I absorbed these insights from mentors, hiring managers, and professionals deeply embedded in data science recruitment, I realized how often these fundamental mathematical concepts surfaced during technical interviews.

They are not about solving obscure equations or performing long-hand derivations. Rather, they demonstrate whether a candidate understands the mechanics behind what they're doing.

Interviewers aren’t just testing knowledge—they’re looking for someone who can think like a data scientist. That means being able to reason probabilistically, spot patterns geometrically, optimize effectively, and interpret results statistically.

If you're preparing for a role in data science or machine learning, spend time with these ideas. Understand the intuition behind them. Code them out. Visualize them. This blend of theory and application is what top professionals recognize as essential.

If this post resonated with you or helped you prepare better for interviews, feel free to share it or reach out! I often write about the intersection of data science, education, and real-world applications.
